This is the blog section.

Files in these directories will be listed in reverse chronological order.

The v0.11.1-alpha release introduces retention policies for accelerated datasets, native Windows installation support, and integration of catalog and schema settings for the Databricks Spark connector. Several bugs have also been fixed for improved stability.

Retention Policies for Accelerated Datasets: Automatic eviction of data from accelerated time-series datasets when a specified temporal column exceeds the retention period, optimizing resource utilization.
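As a rough illustration of what a retention policy does, the sketch below drops rows once their temporal column falls outside the retention period. It is a conceptual example in Python, not Spice's implementation or its configuration syntax; the function name `evict_expired`, the `ts` column, and the row layout are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def evict_expired(rows, time_column, retention_period, now=None):
    """Return only the rows whose timestamp is within the retention period.

    Hypothetical helper for illustration: `rows` is a list of dicts and
    `time_column` names the key holding a timezone-aware datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - retention_period
    # Keep rows at or after the cutoff; anything older is evicted.
    return [row for row in rows if row[time_column] >= cutoff]


now = datetime(2024, 4, 15, tzinfo=timezone.utc)
rows = [
    {"id": 1, "ts": now - timedelta(days=10)},
    {"id": 2, "ts": now - timedelta(days=2)},
    {"id": 3, "ts": now - timedelta(hours=1)},
]
kept = evict_expired(rows, "ts", timedelta(days=7), now=now)
print([row["id"] for row in kept])  # [2, 3]
```

With a seven-day retention period, only the row that is ten days old is evicted.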

Windows Installation Support: Native Windows installation support, including upgrades.

Databricks Spark Connect Catalog and Schema Settings: Improved translation between DataFusion and Spark, providing better Spark Catalog support.

- refresh_sql and manual refresh to e2e tests by @sgrebnov in https://github.com/spiceai/spiceai/pull/1125
- spice dataset configure by @ewgenius in https://github.com/spiceai/spiceai/pull/1140
- spice upgrade on Windows by @sgrebnov in https://github.com/spiceai/spiceai/pull/1155

Full Changelog: https://github.com/spiceai/spiceai/compare/v0.11.0-alpha...v0.11.1-alpha

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved.

The Spice v0.11-alpha release significantly improves the Databricks data connector with Databricks Connect (Spark Connect) support, adds the DuckDB data connector, and adds the AWS Secrets Manager secret store. In addition, enhanced control over accelerated dataset refreshes, improved SSL security for MySQL and PostgreSQL connections, and overall stability improvements have been added.

DuckDB data connector: Use DuckDB databases or connections as a data source.

AWS Secrets Manager Secret Store: Use AWS Secrets Manager as a secret store.

Custom Refresh SQL: Specify a custom SQL query for dataset refresh using refresh_sql.

Dataset Refresh API: Trigger a dataset refresh using the new CLI command spice refresh or via API.

Expanded SSL support for Postgres: SSL mode now supports the disable, require, prefer, verify-ca, and verify-full options, with the default mode changed to require. The pg_sslrootcert parameter was added for setting a custom root certificate, and the pg_insecure parameter is no longer supported.

Databricks Connect: Choose between using Spark Connect or Delta Lake when using the Databricks data connector for improved performance.

Improved SSL support for Postgres: SSL mode now supports the disable, require, prefer, verify-ca, and verify-full options, with the default mode changed to require.

Added the pg_sslrootcert parameter for setting a custom root certificate for the Postgres connector. The pg_insecure parameter is now redundant and is no longer supported.

Internal architecture refactor: The internal architecture of spiced was refactored to simplify the creation of data components and to improve alignment with DataFusion concepts.

@edmondop’s first contribution github.com/spiceai/spiceai/pull/1110!

- NULL values by @gloomweaver in https://github.com/spiceai/spiceai/pull/1067
- NULL values for NUMERIC by @gloomweaver in https://github.com/spiceai/spiceai/pull/1068
- spice refresh CLI command for dataset refresh by @sgrebnov in https://github.com/spiceai/spiceai/pull/1112
- TEXT and DECIMAL types support and properly handling NULL for MySQL by @gloomweaver in https://github.com/spiceai/spiceai/pull/1067
- DATE and TINYINT types support for MySQL by @ewgenius in https://github.com/spiceai/spiceai/pull/1065
- ssl_rootcert_path parameter for MySql data connector by @ewgenius in https://github.com/spiceai/spiceai/pull/1079
- LargeUtf8 support and explicitly passing the schema to data accelerator SqlTable by @phillipleblanc in https://github.com/spiceai/spiceai/pull/1077
- pg_insecure parameter support from Postgres by @ewgenius in https://github.com/spiceai/spiceai/pull/1081

Full Changelog: https://github.com/spiceai/spiceai/compare/v0.10.2-alpha...v0.11.0-alpha

Announcing the release of Spice v0.10.2-alpha (Apr 9, 2024)! 🔥

The v0.10.2-alpha release adds the MySQL data connector and makes external data connections more robust on initialization.

MySQL data connector: Connect to any MySQL server, including SSL support.

Data connections verified at initialization: Verify endpoints and authorization for external data connections (e.g. databricks, spice.ai) at initialization.

- show tables; parsing in the Spice SQL repl.
- lookback_size (& improve SpiceAI’s ModelSource) by @Jeadie in https://github.com/spiceai/spiceai/pull/1016

Full Changelog: https://github.com/spiceai/spiceai/compare/v0.10.1-alpha...v0.10.2-alpha

Announcing the release of Spice v0.10.1-alpha! 🔥

The v0.10.1-alpha release focuses on stability, bug fixes, and usability by improving error messages when using SQLite data accelerators, improving the PostgreSQL support, and adding a basic Helm chart.

Improved PostgreSQL support for Data Connectors: TLS is now supported with PostgreSQL Data Connectors, and VARCHAR and BPCHAR conversions through Spice are improved.

Improved error messages: Simplified error messages from Spice when propagating errors from Data Connectors and Accelerator Engines.

Spice Pods Command: The spice pods command gives quick statistics about the models, dependencies, and datasets loaded by the Spice runtime.

Spice.ai can be deployed to Kubernetes using Helm. Here’s a quick guide to get started:

Step 1. (Optional) Start a local kind cluster:

go install sigs.k8s.io/kind@<version>

kind create cluster

Step 2. Install Spice in your Kubernetes cluster using Helm:

helm repo add spiceai https://helm.spiceai.org

helm install spiceai spiceai/spiceai

Step 3. Verify that the Spice pods are running:

kubectl get pods

kubectl logs deploy/spiceai

Step 4. Run the Spice SQL REPL inside the running pod:

kubectl exec -it deploy/spiceai -- spiced --repl

Learn more about deploying Spice.ai to Kubernetes

- spice login in environments with no browser. (https://github.com/spiceai/spiceai/pull/994)
- spice pods Returns incorrect counts. (https://github.com/spiceai/spiceai/pull/998)

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved.

TL;DR: We’ve rebuilt Spice.ai OSS from the ground up in Rust, as a unified SQL query interface and portable runtime to locally materialize, accelerate, and query datasets sourced from any database, data warehouse or data lake. Learn more at github.com/spiceai/spiceai.

In September, 2021, we introduced Spice.ai OSS as a runtime for building AI-driven applications using time-series data.

We quickly ran into a big problem in making these applications work: data, the fuel for intelligent software, was painfully difficult to access, operationalize, and use, not only in machine learning but also in web frontends, backend applications, dashboards, data pipelines, and notebooks. And we had to make hard tradeoffs between cost and query performance.

We felt this pain every day building 100TB+ scale data and AI systems for the Spice.ai Cloud Platform. So we took our learnings and infused them back into Spice.ai OSS with the capabilities we wished we had.

We rebuilt Spice.ai OSS from the ground up in Rust, as a unified SQL query interface and portable runtime to locally materialize, accelerate, and query data tables sourced from any database, data warehouse or data lake.

Spice is a fast, lightweight (< 150 MB), single-binary runtime designed to be deployed alongside your application or dashboard and within your data or machine learning pipelines. Spice federates SQL queries across databases (MySQL, PostgreSQL, etc.), data warehouses (Snowflake, BigQuery, etc.), and data lakes (S3, MinIO, Databricks, etc.) so you can easily use and combine data wherever it lives. Datasets, declaratively defined, can be materialized and accelerated using your engine of choice, including DuckDB, SQLite, PostgreSQL, and in-memory Apache Arrow records, for ultra-fast, low-latency queries. Acceleration engines run in your infrastructure, giving you flexibility and control over price and performance.

The next-generation of Spice.ai OSS enables:

Better applications. Accelerate and co-locate data with frontend and backend applications for highly concurrent queries, serving more users with faster page loads and data updates. Try the CQRS sample app.

Snappy dashboards, analytics, and BI. Faster, more responsive dashboards without massive compute costs. Spice supports Arrow Flight SQL (JDBC/ODBC/ADBC) for connectivity with Tableau, Looker, PowerBI, and more. Watch the Apache Superset with Spice demo.

Faster data pipelines, machine learning training and inference. Co-locate datasets with pipelines where the data is needed to minimize data-movement and improve query performance. Predict hard drive failure with the SMART data demo.

Easily query many data sources. Federated SQL query across databases, data warehouses, and data lakes using Data Connectors.

Spice is open-source, Apache 2.0 licensed, and is built using industry-leading technologies including Apache DataFusion, Arrow, and Arrow Flight SQL. We’re launching with several built-in Data Connectors and Accelerators and Spice is extensible so more will be added in each release. If you’re interested in contributing, we’d love to welcome you to the community!

You can download and run Spice in less than 30 seconds by following the quickstart at github.com/spiceai/spiceai.

Spice, rebuilt in Rust, introduces a unified SQL query interface, making it simpler and faster to build data-driven applications. The lightweight Spice runtime is easy to deploy and makes it possible to materialize and query data from any source quickly and cost-effectively. Applications can serve more users, dashboards and analytics can be snappier, and data and ML pipelines finish faster, without the heavy lifting of managing data.

For developers this translates to less time wrangling data and more time creating innovative applications and business value.

Check out and star the project on GitHub!

Thank you,

Phillip

Announcing the release of Spice v0.10-alpha! 🧙‍♂️

The Spice.ai v0.10-alpha release focused on additions and updates to improve stability, usability, and the overall Spice developer experience.

Public Bucket Support for S3 Data Connector: The S3 Data Connector now supports public buckets in addition to buckets requiring an access id and key.

JDBC-Client Connectivity: Improved connectivity for JDBC clients, like Tableau.

User Experience Improvements:

- spice login postgres command, streamlining the process for connecting to PostgreSQL databases.

Grafana Dashboard: Improving the ability to monitor Spice deployments, a standard Grafana dashboard is now available.

- spice login postgres command
- spice status with dataset metrics
- show tables output

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved.

Announcing the release of Spice v0.9.1-alpha! 🧙‍♂️

The v0.9.1 release focused on stability, bug fixes, and usability by adding spice CLI commands for listing Spicepods (spice pods), Models (spice models), Datasets (spice datasets), and improved status (spice status) details. In addition, the Arrow Flight SQL (flightsql) data connector and SQLite (sqlite) data store were added.

FlightSQL data connector: Arrow Flight SQL can now be used as a connector for federated SQL query.

SQLite data backend: SQLite can now be used as a data store for acceleration.

- flightsql).
- sqlite).
- spice pods, spice status, spice datasets, and spice models CLI commands.
- GET /v1/spicepods API for listing loaded Spicepods.
- spiced Docker CI build and release.
- linux/arm64 binary build.
- spice sql REPL panics when query result is too large. (https://github.com/spiceai/spiceai/pull/875)
- --access-secret in spice s3 login. (https://github.com/spiceai/spiceai/pull/894)

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved.

Announcing the release of Spice v0.9-alpha! 🧙‍♂️

The v0.9 release adds several data connectors including the Spice data connector for the ability to connect to other Spice instances. Improved observability for the Spice runtime has been added with the new /metrics endpoint for monitoring deployed instances.

Arrow Flight SQL endpoint: The Arrow Flight endpoint now supports Flight SQL, including JDBC, ODBC, and ADBC enabling database clients like DBeaver or BI applications like Tableau to connect to and query the Spice runtime.

Spice.ai data connector: Use other Spice runtime instances as data connectors for federated SQL query across Spice deployments and for chaining Spice runtimes.

Keyring secret store: Use the operating system native credential store, like macOS keychain for storing secrets used by the Spice runtime.

PostgreSQL data connector: PostgreSQL can now be used as both a data store for acceleration and as a connector for federated SQL query.

Databricks data connector: Databricks as a connector for federated SQL query across Delta Lake tables.

S3 data connector: S3 as a connector for federated SQL query across Parquet files stored in S3.

Metrics endpoint: Added new /metrics endpoint for Spice runtime observability and monitoring with the following metrics:

- spiced_runtime_http_server_start counter

- spiced_runtime_flight_server_start counter

- datasets_count gauge

- load_dataset summary

- load_secrets summary

- datasets/load_error counter

- datasets/count counter

- models/load_error counter

- models/count counter

- keyring).
- postgres).
- spiceai).
- databricks) - Delta Lake support.
- s3) - Parquet support.
- /v1/models API.
- /v1/status API.
- /metrics API.

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved.

Announcing the release of Spice v0.8-alpha! 🏹

This is a minor release that builds on the new Rust-based runtime, adding stability and a preview of new features for the first major release.

Secrets management: Spice 0.8 runtime can now configure and retrieve secrets from local environment variables and in a Kubernetes cluster.

Data tables can be locally accelerated using PostgreSQL

Announcing the release of Spice v0.7-alpha! 🏹

Spice v0.7-alpha is an all new implementation of Spice written in Rust. The Spice v0.7 runtime provides developers with a unified SQL query interface to locally accelerate and query data tables sourced from any database, data warehouse, or data lake.

Learn more and get started in minutes with the updated Quickstart in the repository README!

DataFusion SQL Query Engine: Spice v0.7 leverages the Apache DataFusion query engine to provide very fast, high quality SQL query across one or more local or remote data sources.

Data tables can be locally accelerated using Apache Arrow in-memory or by DuckDB.

In February, we announced Spice.ai OSS v0.6 with its data processing and transport completely rebuilt upon Apache Arrow. This enables Spice.ai OSS to scale to datasets 10-100 times larger and brings Spice.ai into the Apache Arrow ecosystem, paving the way for integrations with many popular projects, like Apache Parquet and pandas, and with big data systems like Hive, Drill, Spark, Snowflake, BigQuery, and many more.

In Spice.ai OSS v0.6.1 we announced a new big data system integration… our own, Spice.xyz!

Spice.xyz is data and AI infrastructure for web3.

It’s web3 data made easy. Insanely fast and purpose designed for applications and ML.

Spice.xyz delivers data in Apache Arrow format, over high-performance Apache Arrow Flight APIs to your application, notebook, ML pipeline, and of course, to the Spice.ai runtime.

With Spice.ai OSS v0.6.1, a new Apache Arrow Flight data connector was made available, creating a high-performance bulk-data transport directly into the Spice.ai ML engine. Coupled with Spice.xyz, developers can quickly and easily build web3 data-driven applications that learn and adapt using Spice.ai.

To read the announcement post for Spice.xyz, visit blog.spice.xyz.

Apache Arrow is a specification for an in-memory columnar data format that’s very efficient for analytics operations. Arrow’s zero-copy read semantics coupled with the Flight client-server framework mean extremely fast and efficient data transport and access without serialization overhead. This enables high-performance bulk-data scenarios, critical for data-driven applications and ML. These properties enable an open-architecture based on Apache Arrow, Flight, and Parquet.

Paul Dix, CTO of InfluxData wrote a fantastic post on the Arrow ecosystem and why the future core of InfluxDB is built with Arrow. Sam Crowder also wrote A (Recent) History of Batch Data showing how Arrow is a cornerstone of modern data architecture.

Like InfluxDB, both Spice.ai OSS and Spice.xyz are built on a foundation of Arrow and Flight. This means they benefit from the same high-performance data operations and work well with each other and with other projects in the ecosystem.

Betting on Arrow in Spice.ai enables exciting new applications because AI needs AI-ready data.

Previously it was difficult to efficiently get bulk data from a provider like Spice.xyz to the Spice.ai engine, but now it’s just a matter of configuring the connection through a few lines of YAML.

Imagine creating an application to trade NFTs. With Spice.xyz, developers can query Ethereum for data relating to NFT trading activity. That data is then delivered in the high-performance Arrow format to the Spice.ai runtime. The application’s Spicepod could learn how to value an NFT based upon its trading history and the communities its owners have been engaged in. And this could all be done in real time, something not feasible before.

In addition, using the Arrow Flight connector, other exciting applications are enabled across a ton of domains, like IoT, financial applications, security monitoring, and many more.

To get somewhere you need a goal or destination, a vehicle to get there, and fuel for that vehicle.

When it comes to intelligent, AI-driven applications, Spice.xyz now provides the Spice.ai vehicle with a massive pipeline of web3 data fuel.

The next step is to make it easier for developers to define the destination for the vehicle. Upcoming on the Spice.ai OSS roadmap is the ability for developers to define goals for how the decision-engine should learn. Like learning to maximize measurement “A” or optimizing to a target of “B”.

For example, in web3, this might be to build a client that can learn and adapt to optimize Ethereum Gas Fee prices for token swaps. The goal would be to minimize the gas fee, a problem we experienced first-hand when we built defly.ai. Today you have to encode that goal into your reward function, but our plan is to help do that for you, and all you have to do is tell us the end goal.

Goal-oriented learning applies to many domains, whether it be minimizing fees in crypto or maximizing engagement on a social platform. And personally, we’re excited about the eventual ability to apply Spice.ai and just say “minimize my taxes” :-)

Even for advanced developers, building intelligent apps that leverage AI is still way too hard. Our mission is to make this as easy as creating a modern web page. If that vision resonates with you, join us!

If you’d like to get involved, we’d love to talk. Try out Spice.ai OSS, Spice.xyz, email us “hey,” get in touch on Discord, or reach out on Twitter.

Luke

Announcing the release of Spice.ai v0.6.1-alpha! 🌶

Building upon the Apache Arrow support in v0.6-alpha, Spice.ai now includes new Apache Arrow data processor and Apache Arrow Flight data connector components! Together, these create a high-performance bulk-data transport directly into the Spice.ai ML engine. Coupled with big data systems from the Apache Arrow ecosystem like Hive, Drill, Spark, Snowflake, and BigQuery, it’s now easier than ever to combine big data with Spice.ai.

And we’re also excited to announce the release of Spice.xyz! 🎉

Spice.xyz is data and AI infrastructure for web3. It’s web3 data made easy. Insanely fast and purpose designed for applications and ML.

Spice.xyz delivers data in Apache Arrow format, over high-performance Apache Arrow Flight APIs to your application, notebook, ML pipeline, and of course through these new data components, to the Spice.ai runtime.

Read the announcement post at blog.spice.ai.

Now built with Go 1.18.

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

Announcing the release of Spice.ai v0.6-alpha! 🏹

Spice.ai now scales to datasets 10-100 times larger, enabling new classes of use cases and applications! 🚀 We’ve completely rebuilt Spice.ai’s data processing and transport upon Apache Arrow, a high-performance platform that uses an in-memory columnar format. Spice.ai joins other major projects including Apache Spark, pandas, and InfluxDB in being powered by Apache Arrow. This also paves the way for high-performance data connections to the Spice.ai runtime using Apache Arrow Flight and import/export of data using Apache Parquet. We’re incredibly excited about the potential this architecture has for building intelligent applications on top of a high-performance transport between application data sources and the Spice.ai AI engine.

From data connectors, to REST API, to AI engine, we’ve now rebuilt Spice.ai’s data processing and transport on the Apache Arrow project. Specifically, using the Apache Arrow for Go implementation. Many thanks to Matt Topol for his contributions to the project and guidance on using it.

This release changes the transport between the Spice.ai runtime and the AI Engine from text CSV over gRPC to Apache Arrow Records over IPC (Unix sockets).

This is a breaking change to the Data Processor interface, as it now uses arrow.Record instead of Observation.

Before v0.6, Spice.ai would not scale into the hundreds of thousands of rows.

| Format | Row Number | Data Size | Process Time | Load Time | Transport Time | Memory Usage |
|---|---|---|---|---|---|---|
| csv | 2,000 | 163.15KiB | 3.0005s | 0.0000s | 0.0100s | 423.754MiB |
| csv | 20,000 | 1.61MiB | 2.9765s | 0.0000s | 0.0938s | 479.644MiB |
| csv | 200,000 | 16.31MiB | 0.2778s | 0.0000s | NA (error) | 0.000MiB |
| csv | 2,000,000 | 164.97MiB | 0.2573s | 0.0050s | NA (error) | 0.000MiB |
| json | 2,000 | 301.79KiB | 3.0261s | 0.0000s | 0.0282s | 422.135MiB |
| json | 20,000 | 2.97MiB | 2.9020s | 0.0000s | 0.2541s | 459.138MiB |
| json | 200,000 | 29.85MiB | 0.2782s | 0.0010s | NA (error) | 0.000MiB |
| json | 2,000,000 | 300.39MiB | 0.3353s | 0.0080s | NA (error) | 0.000MiB |

After building on Arrow, Spice.ai now easily scales beyond millions of rows.

| Format | Row Number | Data Size | Process Time | Load Time | Transport Time | Memory Usage |
|---|---|---|---|---|---|---|
| csv | 2,000 | 163.14KiB | 2.8281s | 0.0000s | 0.0194s | 439.580MiB |
| csv | 20,000 | 1.61MiB | 2.7297s | 0.0000s | 0.0658s | 461.836MiB |
| csv | 200,000 | 16.30MiB | 2.8072s | 0.0020s | 0.4830s | 639.763MiB |
| csv | 2,000,000 | 164.97MiB | 2.8707s | 0.0400s | 4.2680s | 1897.738MiB |
| json | 2,000 | 301.80KiB | 2.7275s | 0.0000s | 0.0367s | 436.238MiB |
| json | 20,000 | 2.97MiB | 2.8284s | 0.0000s | 0.2334s | 473.550MiB |
| json | 200,000 | 29.85MiB | 2.8862s | 0.0100s | 1.7725s | 824.089MiB |
| json | 2,000,000 | 300.39MiB | 2.7437s | 0.0920s | 16.5743s | 4044.118MiB |

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

Last month in the v0.5-alpha version, a new learning algorithm was added to Spice.ai: Soft Actor-Critic. This is a very popular algorithm in the Reinforcement Learning field. Let’s see what it is and why this is an interesting addition.

The previous article Understanding Q-learning: How a Reward Is All You Need is not necessary but can be helpful to understand this article.

DeepMind first introduced the actor-critic approach in deep learning in a 2016 paper. We can think of this approach as having 2 tasks:

Those tasks will be made by 2 different neural networks or a single network that branches out in 2 heads. The actor is the part that outputs the policy, while the critic outputs the values.

In most cases, this model was proven to perform very well, better than Deep Q-Learning. The actor is trained to prefer actions associated with the best values from the critic. The critic is trained to correctly estimate rewards (current and future ones) of the actions.

Both will improve over time though we have to keep in mind that the critic is unlikely to evaluate all possible actions in the environment as it will only see actions from states that the actor is likely to take (the policy).

This bias of the system toward its policy is important: the algorithm is meant to train on-policy. The actor and the critic work as a pair: trying to train them with inputs and outputs from another system (humans, or even the same system in past iterations of its own training) will not work.

Multiple improvements were made to limit the bias of the actor-critic approach but the necessity to train on-policy remains. This is very limiting as being able to train from any experience can be very valuable for time and data efficiency.
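For contrast, off-policy algorithms usually keep past transitions in a replay buffer and sample from it during training, whichever policy produced them. The following is a minimal sketch of such a buffer; the class and method names are hypothetical, and it makes no claim about how Spice.ai stores experience.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions (hypothetical helper)."""

    def __init__(self, capacity):
        # A deque with maxlen drops the oldest transition once it is full.
        self._items = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self._items.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniformly sample stored transitions without replacement,
        # regardless of which policy generated them.
        return rng.sample(list(self._items), batch_size)

    def __len__(self):
        return len(self._items)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(state=step, action=0, reward=1.0, next_state=step + 1, done=False)

batch = buffer.sample(2, rng=random.Random(0))
print(len(buffer), len(batch))  # 3 2
```

Because the buffer is bounded, only the three most recent transitions remain after five insertions.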

Soft Actor-Critic allows an Actor-Critic network to train off-policy. It was introduced in a paper in 2018 and included multiple additions to improve its parent algorithm. The main difference is the introduction of the entropy of the actor outputs during the training phase.

Entropy measures the chaos/order of a system (or its uncertainty). If a system always acts the same way, the entropy is minimal. Here the actor’s entropy is at its maximum if all possible actions have the same weight (same probability) and at its minimum if the actor always chooses a single action with 100% confidence.
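A small sketch makes the two extremes concrete. It assumes a discrete set of four actions and uses Shannon entropy with the natural logarithm; the function name `entropy` is only illustrative.

```python
import math


def entropy(probabilities):
    """Shannon entropy (in nats) of a discrete action distribution."""
    # Actions with zero probability contribute nothing to the sum.
    return sum(p * math.log(1.0 / p) for p in probabilities if p > 0)


uniform = [0.25, 0.25, 0.25, 0.25]    # every action equally likely
deterministic = [1.0, 0.0, 0.0, 0.0]  # one action with 100% confidence

print(entropy(uniform))        # equals log(4), the maximum for 4 actions
print(entropy(deterministic))  # zero: there is no uncertainty
```

Any other distribution over the same four actions falls between these two values.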

During the training phase, the actor is trained to maintain the entropy of its outputs at a specific value.

The introduction of the entropy changes the goal of the training: not only to find the best output but also to keep exploring the other actions. The critic will be trained on all actions, even those that occur only in rare cases.
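In the standard formulation from the Soft Actor-Critic literature, this trade-off appears as an entropy bonus added to the reward. The notation below comes from that literature rather than from this post: $\alpha$ is a temperature weight, $\rho_\pi$ is the distribution of states and actions visited under the policy $\pi$, and the entropy is written for a discrete action set.

```latex
J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
  \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right],
\qquad
\mathcal{H}\big(\pi(\cdot \mid s)\big) = -\sum_{a} \pi(a \mid s) \log \pi(a \mid s)
```

Setting $\alpha = 0$ recovers the usual expected-return objective, while a larger $\alpha$ rewards the actor more for keeping its action distribution spread out.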

There are other essential parts, such as having 2 critics and being able to output continuous values, but the entropy is the crucial difference in this algorithm’s training and potential.

As we saw above, the Actor-Critic algorithm is known to outperform Deep Q-Learning in most cases. If we also want to leverage previous data (off-policy training), Soft Actor-Critic is a natural choice. This approach is heavier despite better theoretical results, making it more suitable for complex tasks. For simpler tasks, Deep Q-Learning will still be an appealing option for its speed of training and its ability to quickly converge to a good solution.

We can think of Soft Actor-Critic as a complex machine designed to take actions while keeping a variety of possibilities open. Sometimes several options seem equally rewarding: a simpler algorithm would take the one it evaluates as best even though the margin is small and the precision of its evaluation may not be enough to justify the choice. This tendency to quickly converge to a solution has its benefits and drawbacks.

Adding new algorithms is essential to Spice.ai, so the procedure was designed to be straightforward.

Looking at the source code, the code related to training agents is in the ai/src folder. This part of the code is written in Python, as most modern AI libraries are distributed in this language.

In this folder, every agent is in the algorithms folder, and each has its subfolder. There is an agent_interface file that defines the main class that the different agents should inherit from and a factory script responsible for creating instances of an agent from a given algorithm name.
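The layout described above could be sketched roughly as follows. This is an illustrative outline only: the method names (`act`, `learn`), the `RandomAgent` class, the `AGENTS` registry, and the `get_agent` function are hypothetical and are not the actual contents of agent_interface or the factory script. Only the SpiceAIAgent class name comes from the Spice.ai source.

```python
import random
from abc import ABC, abstractmethod


class SpiceAIAgent(ABC):
    """Base class that every agent inherits from (method names are hypothetical)."""

    @abstractmethod
    def act(self, state):
        """Choose an action index for the given state."""

    @abstractmethod
    def learn(self, state, action, reward, next_state):
        """Update the agent from one observed transition."""


class RandomAgent(SpiceAIAgent):
    """Toy agent used only to exercise the factory."""

    def __init__(self, action_count):
        self.action_count = action_count

    def act(self, state):
        return random.randrange(self.action_count)

    def learn(self, state, action, reward, next_state):
        pass  # A real agent would update its networks here.


# Registry mapping an algorithm name to its implementation.
AGENTS = {"random": RandomAgent}


def get_agent(name, **kwargs):
    """Instantiate the agent registered under `name`."""
    try:
        agent_class = AGENTS[name]
    except KeyError:
        raise ValueError(f"Unknown algorithm: {name}") from None
    return agent_class(**kwargs)


agent = get_agent("random", action_count=3)
print(type(agent).__name__, agent.act(state=None) in range(3))  # RandomAgent True
```

Keeping construction behind a single factory function means the rest of the code only needs the algorithm name, so adding an agent does not require changes elsewhere.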

Adding a new agent is simple:

- algorithms
- algorithm_id, name, and docs_link (see other json as an example) in the folder
- SpiceAIAgent defined in the agent_interface script
- factory script to instantiate the new implementation when its name is called.

For the new agent, inheriting from the main SpiceAIAgent class, 5 functions need to be implemented:

Soft Actor-Critic is a fascinating algorithm that performs well in complex environments. We now support Soft Actor-Critic in Spice.ai, which is another step forward in constantly improving the performance of the AI engine. Additionally, we’ll continue improving existing algorithms and adding newer ones over time. We designed the platform for ease of implementation and experimentation, so if you’d like to try building your own agent, you can get the source code on GitHub and contribute to the platform. Say hi on Discord, reach out on Twitter, or email us.

I hope you enjoyed this post and learned something new.

Corentin

AI unlocks a new generation of intelligent applications that learn and adapt from data. These applications use machine learning (ML) to out-perform traditionally developed software. However, the data engineering required to leverage ML is a significant challenge for many product teams. In this post, we’ll explore the three classes of data you need to build next-generation applications and how Spice.ai handles runtime data engineering for you.

While ML has many different applications, one way to think about ML in a real-time application that can adapt is as a decision engine. Phillip discussed decision engines and their potential uses in A New Class of Applications That Learn and Adapt. This decision engine learns and informs the application how to operate. Of course, applications can and do make decisions without ML, but a developer normally has to code that logic. And the intelligence of that code is fixed, whereas ML enables a machine to constantly find the appropriate logic and evolve the code as it learns. For ML to do this, it needs three classes of data.

We don’t want any decision, though. We want high-quality, informed decisions. If you consider making higher quality, informed decisions over time, you need three classes of information. These classes are historical information, real-time or present information, and the results of your decisions.

Especially recently, stock or crypto trading is something many of us can relate to. To make high-quality, informed investing decisions, you first need general historical information on the price, security, financials, industry, previous trades, etc. You study this information and learn what might make a good investment or trade.

Second, you need a real-time updated stream of data as it happens to make a decision. If you were stock trading, this information might be the stock price on the day or hour you want to make the trade. You need to apply what you learned from historical data to the current information to decide what trade to place.

Finally, if we’re going to make better decisions over time, we need to capture and learn from the results of those decisions. Whether you make a great or poor trade, you want to incorporate that experience into your historical learning.

Using all three data classes together results in higher quality decisions over time. Broad data across these classes are useful, and we could make some nice trades with that. Still, we can make an even higher quality trading decision with personal context. For example, we may want to consider the individual tax consequences or risk level of the trade for our situation. So each of these classes also comes with global or local variants. We combine global information, like what worked well for everyone, and local experience, what worked well for us and our situation, to make the best, overall informed decision.

Consider how you would capture these three data classes and make them available to both the application and ML in the trading example. This data engineering can be a pretty big challenge.

First, you need a way to gather and consume historical information, like stock prices, and keep that updated over time. You need to handle streaming constantly updated real-time data to make runtime decisions on how to operate. You need to capture and match the decisions you make and feed that back into learning. And finally, you need a way to provide personal or local context, like holding off on sell trades until next year, to stay within a tax threshold, or identifying a pattern you like to trade. If all this wasn’t enough, as we learned from Phillip’s AI needs AI-ready data post, all three data classes need to be in a format that ML can use.

If you can afford a data or ML team, they may do much of this for you. However, this model starts to look quite waterfall-like and is not well suited to applications that want to learn and adapt in real-time. As in a waterfall approach, you would provide requirements to your data team, and they would do the data engineering required to provide you with the first two classes of data, historical and real-time. They may give you ML-ready data or train an ML model for you. However, there is often a large latency to apply that data or model in your application and a long turn-around time if it does not meet your requirements. In addition, to capture the third class of data, you would need to capture the results of the decisions your application made using those models and send them back to the data team to incorporate in future learning. This latency through the data, decision-making, learning, and adaptation process is often infeasible for a real-world app.

And, if you can’t afford a data team, you have to figure out how to do all that yourself.

Modern software engineering practices have favored agile methodologies to reduce time to learn and adapt applications to customer and business needs. Spice.ai takes inspiration from agile methods to provide developers with a fast, iterative development cycle.

Spice.ai provides mechanisms for making all three classes of data available to both the application and the decision engine. Developers author Spicepods declaring how data should be captured, consumed, and made ML-ready so that all three classes are consistent and available to ML.

The Spice.ai runtime exposes developer-friendly APIs and data connectors for capturing and consuming data and annotating that data with personal context. The runtime generates AI-ready data for you and makes it available directly for ML. These APIs also make it easy to capture application decisions and incorporate the resulting learning.

The Spice.ai approach short circuits the traditional waterfall-like data process by keeping as much data as possible application local instead of round-tripping through an external pipeline or team, especially valuable for real-time data. The application can learn and adapt faster by reducing the latency of decision consequences to learning.

Spice.ai enables personalized learning from personal context and experiences through the interpretations mechanism. Interpretations allow an application to provide additional information or an “interpretation” of a time range as input to learning. The trading example could be as simple as labeling a time range as a good time to buy or providing additional contextual information such as tax considerations, etc. Developers can also use interpretations to record the results of decisions with more context than what might be available in the observation space. You can read more about Interpretations in the Spice.ai docs.

While Spice.ai focuses on ensuring consistent ML-ready data is available, it does not replace traditional data systems or teams. They still have their place, especially for large historical datasets, and Spice.ai can consume data produced by them. Where possible, especially for application and real-time data, Spice.ai keeps runtime data local to create a virtuous cycle of data from the application to the decision engine and back again, enabling faster and more agile learning and adaptation.

In summary, building an intelligent application driven by AI-recommended decisions can require a significant amount of data engineering to learn, make decisions, and incorporate the results. The Spice.ai runtime enables you as a developer to focus on consuming those decisions and tuning how the AI engine should learn rather than on the runtime data engineering.

The potential of the next generation of intelligent applications to improve the quality of our lives is very exciting. Using AI to help applications make better decisions, whether that be AI-assisted investing, improving the energy efficiency of our homes and buildings, or supporting us in deciding on the most appropriate medical treatment, is very promising.

Even for advanced developers, building intelligent apps that leverage AI is still way too hard. Our mission is to make this as easy as creating a modern web page. If that vision resonates with you, join us!

If you want to get involved, we’d love to talk. Try out Spice.ai, email us “hey,” get in touch on Discord, or reach out on Twitter.

Luke

A new class of applications that learn and adapt is becoming possible through machine learning (ML). These applications learn from data and make decisions to achieve the application’s goals. In the post Making apps that learn and adapt, Luke described how developers integrate this ability to learn and adapt as a core part of the application’s logic. You can think of the component that does this as a “decision engine.” This post will explore a brief history of decision engines and use-cases for this application class.

The idea to make intelligent decision-making applications is not new. Developers first created these applications around the 1970s [1], and they are some of the earliest examples of using artificial intelligence to solve real-world problems.

The first applications used a class of decision engines called “expert systems.” A distinguishing trait of expert systems is that they encode human expertise in rules for decision-making. Domain experts created combinations of rules that powered decision-making capabilities.

Some uses of expert systems include:

However, the resources required to build expert systems make employing them infeasible for many applications [2]. They often need a significant time and resource investment to capture and encode expertise into complex rule sets. These systems also do not automatically learn from experience, relying on experts to write more rules to improve decision-making.

With the advent of modern deep-learning techniques and the ability to access significantly more data, it is now possible for the computer, not only the developer, to learn and encode the rules to power a decision engine and improve them over time. The vision for Spice.ai is to make it easy for developers to build this new class of applications. So what are some use-cases for these applications?

Today: The air conditioning system for an office building runs on a fixed schedule and is set to a fixed temperature in business hours, only adjusting using in-room sensor data, if at all. This behavior potentially overcools at business close as the outside temperature lowers and the building starts vacating.

With Spice.ai: Using Spice.ai, the application combines time-series data from multiple data sources, including the time of day and day of the week, building/room occupancy, and outside temperature, energy consumption, and pricing. The A/C controller application learns how to adjust the air conditioning system as the room naturally cools towards the end of the day. As the occupancy decreases, the decision engine is rewarded for maintaining the desired temperature and minimizing energy consumption/cost.

Today: Customers order food delivery with a mobile app. When the order is ready to be picked up from the restaurant, the order is dispatched to a delivery driver by a simple heuristic that chooses the nearest available driver. As the app gets more popular with customers and the number of restaurants, drivers, and customers increases, the heuristic needs to be constantly tuned or supplemented with human operators to handle the demand.

With Spice.ai: The application learns which driver to dispatch to minimize delivery time and maximize customer star ratings. It considers several factors from data, including patterns in both the restaurant and driver’s order histories. As the number of users, drivers, and customers increases over time, the app adapts to keep up with the changing patterns and demands of the business.

Today: When trading stocks through a broker like Fidelity or TD Ameritrade, your broker will likely route your order to an exchange like the NYSE. And in the emerging world of crypto, you can place your trade or swap directly on a decentralized exchange (DEX) like Uniswap or PancakeSwap. In both cases, orders are likely routed either by a traditional rule-based expert system or manually.

With Spice.ai: A smart order routing application learns from data such as pending transactions, time of day, day of the week, transaction size, and the recent history of transactions. It finds patterns to determine the most optimal route or exchange to execute the transaction and get you the best trade.

A new class of applications that can learn and adapt is made possible by integrating AI-powered decision engines. Spice.ai is a decision engine that makes it easy for developers to build these applications.

If you’d like to partner with us in creating this new generation of intelligent decision-making applications, we invite you to join us on Discord, reach out on Twitter or email us.

Phillip

[1] Russell, Stuart; Norvig, Peter (1995). Artificial Intelligence: A Modern Approach. Simon & Schuster. pp. 22–23. ISBN 978-0-13-103805-9.

[2] Kendal, S. L.; Creen, M. (2007). An Introduction to Knowledge Engineering. London: Springer. ISBN 978-1-84628-475-5.

Announcing the release of Spice.ai v0.5.1-alpha! 📈

This minor release builds upon v0.5-alpha adding the ability to start training from the dashboard plus support for monitoring training runs with TensorBoard.

A “Start Training” button has been added to the pod page on the dashboard so that you can easily start training runs from that context.

Training runs can now be started by:

/api/v0.1/pods/{pod name}/train

TensorBoard monitoring is now supported when using DQL (default) or the new SACD learning algorithm that was announced in v0.5-alpha.

When enabled, TensorBoard logs will automatically be collected and an “Open TensorBoard” button will be shown on the pod page in the dashboard.

Logging can be enabled at the pod level with the training_loggers pod param or per training run with the CLI --training-loggers argument.

Support for VPG will be added in v0.6-alpha. The design allows for additional loggers to be added in the future. Let us know what you’d like to see!

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

There are two general ways to train an AI to match a given expectation. We can give it the expected outputs (commonly named labels) for different inputs; we call this supervised learning. Or we can provide a reward for each output as a score; this is reinforcement learning (RL).

Supervised learning works by tweaking all the parameters (weights in neural networks) to fit the desired outputs, expecting that given enough input/label pairs the AI will find common rules that generalize for any input.

Reinforcement learning’s reward is often provided from a simple function that can score any output: we don’t know what specific output would be best, but we can recognize how good the result is. In this latter statement there are two underlying concepts we will address in this post:

Those questions are already mostly answered, and many algorithms deal with those topics. Our journey here will be to understand how we tackle those questions and end up with a beautiful formula that is at the core of modern approaches of RL:
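In its standard form, with a learning rate α and a discount factor γ (both explained later in this post), the Q-learning update reads as follows. The symbols s, a, and r stand for state, action, and reward, and the index t marks the step:

```latex
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_t + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t) \right]
```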

The vast majority, if not all, of modern RL algorithms are based on the principles of Q-learning: the idea is to evaluate a ‘reward expectation’ for each possible action. If we can have a good evaluation, we could maximize the reward by choosing actions with the maximum evaluated rewards. The function giving this expected reward is named Q. For now, we will assume we can have a reward for any action.
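Under that assumption, and writing s for the state, a for the action, and r for the reward function (notation chosen here for illustration), the simplest definition sets Q to the immediate reward:

```latex
Q(s_t, a_t) = r(s_t, a_t)
```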

The t indices show that the state and action aren’t constant and will vary, usually with time/action taken. On the other hand, the Q function and the reward function r are unique functions that ideally return the ’expected reward’ for any (state, action) pairs.

For now, we will assume we can have a reward that gives an objective and perfect evaluation of each state/action.

We know that actions’ outcomes (rewards) will vary depending on the current state we are in, otherwise the problem would be trivial to solve. If the states that are relevant to our actions can be numbered, a simple way would be to build a table with all the possible states/action pairs. There are different ways to build such a table depending on how we can interact with our environment. Eventually, we would have a good ‘map’ to guide us to do the best actions.
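As a rough illustration, such a table can be sketched as a dictionary keyed by (state, action) pairs. The states, actions, and observed rewards below are made up for the example, and averaging observed rewards is only one of the possible ways to fill the table:

```python
from collections import defaultdict

ACTIONS = ["left", "right"]  # hypothetical action set


def build_q_table(observations):
    """Average the observed reward for every (state, action) pair."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for state, action, reward in observations:
        totals[(state, action)] += reward
        counts[(state, action)] += 1
    return {pair: totals[pair] / counts[pair] for pair in totals}


def best_action(q_table, state):
    """Pick the action with the highest tabulated reward (0.0 if unseen)."""
    return max(ACTIONS, key=lambda action: q_table.get((state, action), 0.0))


# Made-up (state, action, reward) observations.
observations = [
    (0, "left", 0.0), (0, "right", 1.0),
    (1, "left", 2.0), (1, "right", 0.5), (1, "right", 1.5),
]
q_table = build_q_table(observations)
print(best_action(q_table, 0))
print(best_action(q_table, 1))
```

The table needs one entry per (state, action) pair, which is why this approach only works while the number of states stays small.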

When the number of variables of the environment relevant to our actions/rewards becomes too large, the number of possible states grows quickly. It doesn’t take a lot of possible parameters to make the Q-table approach unfeasible. Neural networks are known to work very nicely and efficiently in high dimensionality (with many input variables). They also generalize well, so the idea in Deep Q-Learning is to use a neural network to predict the different Q values for each action given a state.

In this case, we do not need to give the state/action pairs but only the state, as the neural network would exhaustively return all the Q values associated with each action. Outputting all actions’ Q value is a common method as the general cases have a complex environment but a smaller number of possible actions.
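A minimal sketch of that idea, using a tiny untrained two-layer network written in plain Python. The layer sizes, the `tanh` activation, and the helper names are illustrative assumptions, not Spice.ai's implementation:

```python
import math
import random


def make_layer(n_inputs, n_outputs, rng):
    """Create a dense layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def dense(layer, inputs):
    """Apply one dense layer: a weighted sum plus bias per output unit."""
    weights, biases = layer
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def q_values(network, state):
    """Return one Q value per action for the given state."""
    hidden_layer, output_layer = network
    hidden = [math.tanh(h) for h in dense(hidden_layer, state)]
    return dense(output_layer, hidden)


rng = random.Random(0)
STATE_SIZE, HIDDEN_SIZE, N_ACTIONS = 4, 8, 3  # hypothetical sizes
network = (make_layer(STATE_SIZE, HIDDEN_SIZE, rng),
           make_layer(HIDDEN_SIZE, N_ACTIONS, rng))

state = [0.1, -0.3, 0.7, 0.0]  # only the state is passed in
qs = q_values(network, state)
greedy_action = max(range(N_ACTIONS), key=lambda a: qs[a])
print(qs, greedy_action)
```

The network is not trained here, so the Q values are meaningless. The point is the shape of the computation: one state in, one Q value per action out.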

This method works very well. It is similar to supervised learning with states as inputs and rewards as labels. We assumed so far that we had a reward for each action, and we chose the next action with the best reward (called a greedy policy). In many cases this is not enough: even if an action would yield the best reward at a given state, this may affect the next state so that we wouldn’t optimize the reward in the long term. Also, if we can’t have a reward for each action, we usually give 0 as a reward. We will not be able to choose the right action if they affect later states despite not yielding different rewards at the current state.

The sparsity of rewards or the long-term calculation of total reward (non-greedy policies) leads us to diverge from supervised learning and learn potential future rewards.

TD-learning is a clever way to account for potential future value without knowing them yet. TD is a model-free class of algorithms: it does not simulate future states. The main idea is to consider all the rewards of a sequence of actions to give a better value than just the reward of the next action.

We can, for instance, sum all the future rewards:

Mathematically this can be written as:
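One way to write that sum, assuming r_k denotes the reward received at step k, T is the last step of the sequence, and V is the value attributed to the state (symbols chosen here for illustration):

```latex
V(s_t) = r_t + r_{t+1} + \dots + r_T = \sum_{k=t}^{T} r_k
```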

This is named TD(0): the simplest form of TD method, accumulating all the rewards.

We could try different trajectories (sequence of actions) and retrospectively get the final reward for each action, but this has 2 drawbacks: the environment is usually too vast, and the sequence of actions might not even have a definite end. Also, such exhaustive methods might not be very efficient. Instead, we can evaluate the ‘value’ of the next state overall, like the maximum of all its possible rewards (direct reward), and add this value to the reward of a given action.

If a state can have different branches, we can select the best one, and this would be our policy, the way we choose actions. This simple form of taking the maximum is called the ‘greedy’ policy.

This can be written down as:

The expected value notation is defined as:
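For a discrete random variable X that takes the values x_i with probabilities p_i, the usual definition is:

```latex
\mathbb{E}[X] = \sum_{i} p_i \, x_i, \qquad \sum_{i} p_i = 1
```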

For a greedy policy the probabilities p would all be set to 0 but the one associated with the highest return to 1 (in case of equality between n actions, we would attribute ‘1/n’ as probabilities to get the same expected value).

The expected reward can be replaced by the Q function we used earlier, which now can be denominated to be specific to our chosen policy (named π):

We previously discussed the problem of not being able to go through all the states exhaustively and that the evaluation of the Q value from a neural network could help. We want to use the TD method to have a better value estimation that will consider potential future rewards.

The TD(0) method is elegant as we can, in fact, only use the next state’s expected value instead of all future ones. The idea is that with successive evaluations, we build a chain of dependencies as each state’s value depends on the next one.

We can see that the greedy policy would work even with null rewards in the trajectory. We can make our greedy policy explicit, going back to using the Q value instead of the state value V:
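Assuming the value of the next state is taken to be the best Q value available from it, the greedy target can be written as:

```latex
Q(s_t, a_t) = r(s_t, a_t) + \max_{a} Q(s_{t+1}, a)
```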

We need to fix a problem: if a trajectory grows too long or never ends, a state value can potentially grow indefinitely. To counter that, we can add a discount factor (originally named lambda, usually referred to as gamma in Q-learning) for the next state’s value:
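With γ as the discount factor and r_t as shorthand for r(s_t, a_t), the target takes the familiar form:

```latex
Q(s_t, a_t) = r_t + \gamma \max_{a} Q(s_{t+1}, a)
```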

Notice that we simplify the reward notation for clarity.

To avoid exploding values, this discount has to be between 0 and 1 (strictly below 1). We can think about it as giving more importance to the direct reward than the future ones. As the contribution of later rewards decreases, the chain of actions can grow without the calculated value growing without bound. If the reward has an upper limit, the value will also be bounded.
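To see why the value stays bounded, assume every reward is at most r_max (a symbol introduced here for illustration). The discounted sum of rewards is then dominated by a geometric series:

```latex
\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \le r_{\max} \sum_{k=0}^{\infty} \gamma^{k} = \frac{r_{\max}}{1 - \gamma}, \qquad 0 \le \gamma < 1
```

For example, with γ = 0.9 and rewards of at most 1, no value can exceed 10.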

The sparsity of rewards is also solved: giving only a positive reward after many non-rewarding steps will create smooth values for the intermediate states. Any reward, positive or negative, will diffuse its value to the neighbor states.

Finally, as we train a neural network to estimate the Q function, we need to update its target with successive iteration. We cannot fully trust the estimator (a neural network here) to give the correct value, so we introduce a learning rate to update the target smoothly.

That is it! We now understand all the parts of this formula. Over multiple training steps with different states, the training should find a good average Q function. While training, the estimator uses its own output to train itself (commonly referred to as bootstrapping): it is as if it were chasing itself. Bootstrapping can lead to instability in the training process. There are many additional methods to help against such instability.
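The update can be sketched on a toy problem. The example below uses a table instead of a neural network to keep it short, and trains only from a fixed list of recorded transitions. The five-state chain, the two actions, and the values of the learning rate and discount factor are all assumptions made for illustration:

```python
GAMMA = 0.9   # discount factor (assumed value)
ALPHA = 0.5   # learning rate (assumed value)
N_STATES = 5  # states 0..4; state 4 is terminal
ACTIONS = ("stay", "forward")


def make_transitions():
    """Recorded (state, action, reward, next_state) tuples for a simple chain.

    Only the step that reaches the last state is rewarded (sparse reward).
    """
    transitions = []
    for state in range(N_STATES - 1):
        transitions.append((state, "stay", 0.0, state))
        next_state = state + 1
        reward = 1.0 if next_state == N_STATES - 1 else 0.0
        transitions.append((state, "forward", reward, next_state))
    return transitions


def train(transitions, sweeps=200):
    """Repeatedly apply the Q-learning update to the recorded transitions."""
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(sweeps):
        for state, action, reward, next_state in transitions:
            if next_state == N_STATES - 1:
                best_next = 0.0  # a terminal state has no future value
            else:
                best_next = max(q[(next_state, a)] for a in ACTIONS)
            target = reward + GAMMA * best_next
            # Move the estimate a fraction ALPHA of the way toward the target.
            q[(state, action)] += ALPHA * (target - q[(state, action)])
    return q


q = train(make_transitions())
for state in range(N_STATES - 1):
    print(state, round(q[(state, "forward")], 3))
```

Although only the last step is rewarded, the value of moving forward approaches 1, 0.9, 0.81, and 0.729 as we move away from the goal: the single reward has diffused backward through the chain, discounted by γ at each step.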

From giving rewards, sparse or not, binary or fine-grained, we have a smooth space of values for all our states/actions so the AI can follow a greedy policy to the best outcome.

This way of training is not a silver bullet and there is no guarantee that the AI will find a correlation from the information given as state to the returned reward.

We can see how our rewards are used to train AI’s policies using Q-learning. By understanding the many iterations required and the bootstrapping issues, we can help our AI by carefully giving relevant state information and reward:

We didn’t see how the AI’s algorithm can explore different actions given an environment here. Spice.ai’s technology focuses exclusively on off-policy training where we only have past data and cannot interact with the environment. RL is a vast topic and currently quickly growing. Robotics is a fantastic field of application; many other areas are yet to be explored with such a technology. We hope to push forward the technology and its field of application with our platform.

If you’d like to partner with us on the mission of making new applications by leveraging RL, we invite you to discuss with us on Discord, reach out on Twitter or email us.

I hope you enjoy this post and learn new things.

Corentin

We are excited to announce the release of Spice.ai v0.5-alpha! 🥇

Highlights include a new learning algorithm called “Soft Actor-Critic” (SAC), fixes to the behavior of spice upgrade, and a more consistent authoring experience for reward functions.

If you are new to Spice.ai, check out the getting started guide and star spiceai/spiceai on GitHub.

The addition of the Soft Actor-Critic (Discrete) (SAC) learning algorithm is a significant improvement to the power of the AI engine. It is not set as the default algorithm yet, so to start using it pass the --learning-algorithm sacd parameter to spice train. We’d love to get your feedback on how it’s working!

With the addition of the reward function files that allow you to edit your reward function in a Python file, the behavior of starting a new training session by editing the reward function code was lost. With this release, that behavior is restored.

In addition, there is a breaking change to the variables used to access the observation state and interpretations. This change was made to better reflect the purpose of the variables and make them easier to work with in Python.

| Previous (Type) | New (Type) |
|---|---|
| prev_state (SimpleNamespace) | current_state (dict) |
| prev_state.interpretations (list) | current_state_interpretations (list) |
| new_state (SimpleNamespace) | next_state (dict) |
| new_state.interpretations (list) | next_state_interpretations (list) |

The Spice.ai CLI will no longer recommend “upgrading” to an older version. An issue was also fixed where trying to upgrade the Spice.ai CLI using spice upgrade on Linux would return an error.

prev_state and new_state to current_state and next_state to be consistent with the reward function files.

spice upgrade command.

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

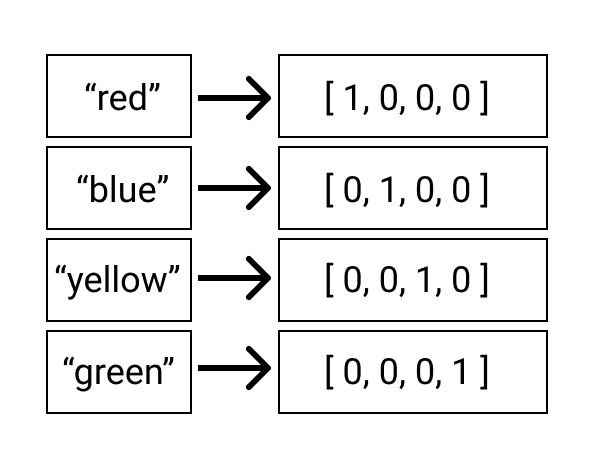

A significant challenge when developing an app powered by AI is providing the machine learning (ML) engine with data in a format that it can use to learn. To do that, you need to normalize the numerical data, one-hot encode categorical data, and decide what to do with incomplete data - among other things.

This data handling is often challenging! For example, to learn from Bitcoin price data, the prices are better if normalized to a range between -1 and 1. Being close to 0 is also a problem because of the lack of precision in floating-point representations (usually under 1e-5).

As a developer, if you are new to AI and machine learning, a great talk that explains the basics is Machine Learning Zero to Hero. Spice.ai makes the process of getting the data into an AI-ready format easy by doing it for you!

You write code with if statements and functions, but your machine only understands 1s and 0s. When you write code, you leverage tools, like a compiler, to translate that human-readable code into a machine-readable format.

Similarly, data for AI needs to be translated or “compiled” to be understood by the ML engine. You may have heard of tensors before; they are simply another word for a multi-dimensional array and they are the language of ML engines. All inputs to and all outputs from the engine are in tensors. You could use the following techniques when converting (or “compiling”) source data to a tensor.
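Two such techniques, min-max normalization of numerical data and one-hot encoding of categorical data, can be sketched in plain Python. The prices, the category names, and the function names are made up for the example, and this is not how the Spice.ai runtime itself is implemented:

```python
CATEGORIES = ["buy", "sell", "hold"]  # hypothetical categorical values


def min_max_normalize(values, low=-1.0, high=1.0):
    """Rescale numbers linearly so the smallest maps to low and the largest to high."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # All values are identical: map them to the middle of the range.
        return [(low + high) / 2 for _ in values]
    scale = (high - low) / (v_max - v_min)
    return [low + (v - v_min) * scale for v in values]


def one_hot(value, categories):
    """Encode a category as a list with a single 1.0 at its position."""
    return [1.0 if value == c else 0.0 for c in categories]


prices = [30000.0, 45000.0, 60000.0]  # made-up price samples
sides = ["buy", "hold", "sell"]       # made-up categorical samples

normalized = min_max_normalize(prices)
# Each row is one sample: the normalized price followed by the one-hot category.
tensor = [[p] + one_hot(s, CATEGORIES) for p, s in zip(normalized, sides)]
for row in tensor:
    print(row)
```

The result is a two-dimensional array of floats, one row per sample, which is the kind of structure an ML engine expects as input.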

There are excellent tools like Pandas, Numpy, scipy, and others that make the process of data transformation easier. However, most of these tools are Python libraries and frameworks - which means having to learn Python if you don’t know it already. Plus, when building intelligent apps (instead of just doing pure data analysis), this all needs to work on real-time data in production.

The tools mentioned above are not designed for building real-time apps. They are often designed for analytics/data science.

In your app, you will need to do this data compilation in real-time - and you can’t rely on a local script to help process your data. It becomes trickier if the team responsible for the initial training of the machine learning model is not the team responsible for deploying it out into production.

How data is loaded and processed in a static dataset is likely very different from how the data is loaded and processed in real-time as your app is live. The result often is two separate codebases that are maintained by different teams that are both responsible for doing the same thing! Ensuring that those codebases stay consistent and evolve together is another challenge to tackle.

Spice.ai handles the “compilation” of data for you.

You specify the data that your ML should learn from in a Spicepod. The Spice.ai runtime handles the logistics of gathering the data and compiling it into an AI-ready format.

It does this by using many techniques described earlier, such as normalization and one-hot encoding. And because we’re continuing to evolve Spice.ai, our data compilation will only get better over time.

In addition, the design of the Spice.ai runtime naturally ensures that the data used for both the training and real-time cases are consistent. Spice.ai uses the same data-components and runtime logic to produce the data. And not only that, you can take this a step further and share your Spicepod with someone else, and they would be able to use the same AI-ready data for their applications.

Spice.ai handles the process of compiling your data into an AI-ready format in a way that is consistent both during the training and real-time stages of the ML engine. A Spicepod defines which data to get and where to get it. Sharing this Spicepod allows someone else to use the same AI-ready data format in their application.

Building intelligent apps that leverage AI is still way too hard, even for advanced developers. Our mission is to make this as easy as creating a modern web page. If the vision resonates with you, join us!

Our Spice.ai Roadmap is public, and now that we have launched, the project and work are open for collaboration.

If you are interested in partnering, we’d love to talk. Try out Spice.ai, email us “hey,” get in touch on Discord, or reach out on Twitter.

We are just getting started! 🚀

Phillip

In my previous post, Teaching Apps how to Learn with Spicepods, I introduced Spicepods as packages of configuration that describe an application’s data-driven goals and how it should learn from data. To leverage Spice.ai in your application, you can author a Spicepod from scratch or build upon one fetched from the spicerack.org registry. In this post, we’ll walk through the creation and authoring of a Spicepod step-by-step from scratch.

As a refresher, a Spicepod consists of:

We’ll create the Spicepod for the ServerOps Quickstart, an application that learns when to optimally run server maintenance operations based upon the CPU-usage patterns of a server machine.

We’ll also use the Spice CLI, which you can install by following the Getting Started guide or Getting Started YouTube video.

Modern web development workflows often include a file watcher to hot-reload so you can iteratively see the effect of your change with a live preview.

Spice.ai takes inspiration from this and enables a similar Spicepod manifest authoring experience. If you first start the Spice.ai runtime in your application root before creating your Spicepod, it will watch for changes and apply them continuously so that you can develop in a fast, iterative workflow.

You would normally do this by opening two terminal windows side-by-side, one that runs the runtime using the command spice run and one where you enter CLI commands. In addition, developers would open the Spice.ai dashboard located at http://localhost:8000 to preview changes they make.

The easiest way to create a Spicepod is to use the Spice.ai CLI command: spice init <Spicepod name>. We’ll make one in the ServerOps Quickstart application called serverops.

The CLI saves the Spicepod manifest file in the spicepods directory of your application. You can see it created a new serverops.yaml file, which should be included in your application and be committed to your source repository. Let’s take a look at it.

The initialized manifest file is very simple. It contains a name and three main sections being:

We’ll walk through each of these in detail, and as a Spicepod author, you can always reference the documentation for the Spicepod manifest syntax.

You author and edit Spicepod manifest files in your favorite text editor with a combination of Spice.ai CLI helper commands. We eventually plan to have a VS Code extension and dashboard/portal editing abilities to make this even easier.

To build an intelligent, data-driven application, we must first start with data.

A Spice.ai dataspace is a logical grouping of data with definitions of how that data should be loaded and processed, usually from a single source. A combination of its data source and its name identifies it, for example, nasdaq/msft or twitter/tweets. Read more about Dataspaces in the Core Concepts documentation.

Let’s add a dataspace to the Spicepod manifest to load CPU metric data from a CSV file. This file is a snapshot of data from InfluxDB, a time-series database we like.

We can see this dataspace is identified by its source hostmetrics and name cpu. It includes a data section with a file data connector, the path to the file, and a data processor to know how to process it. In addition, it defines a single measurement usage_idle under the measurements section, which is a measurement of CPU load. In Spice.ai, measurements are the core primitive the AI engine uses to learn and are always numerical data. Spice.ai includes a growing library of community-contributable data connectors and data processors you can include in your Spicepod to access data. You can also contribute your own.

Finally, because the data is a snapshot of live data loaded from a file, we must set a Spicepod epoch_time that defines the data’s start Unix time.
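Unix time is the number of seconds since 1970-01-01 00:00:00 UTC. If you know the start of your snapshot as a calendar date, a small sketch like the following converts it. The date used here is hypothetical and is not the one from the ServerOps data:

```python
from datetime import datetime, timezone


def to_unix_time(moment):
    """Convert a timezone-aware datetime to whole seconds since the Unix epoch."""
    return int(moment.timestamp())


# Hypothetical snapshot start: midnight UTC on 1 January 2021.
start = datetime(2021, 1, 1, tzinfo=timezone.utc)
epoch_time = to_unix_time(start)
print(epoch_time)
```

Passing an explicit UTC timezone avoids the local-time interpretation Python applies to naive datetime objects.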

Now we have a dataspace, called hostmetrics/cpu, that loads CSV data from a file and processes the data into a usage_idle measurement. The file connector might be swapped out with the InfluxDB connector in a production application to stream real-time CPU metrics into Spice.ai. And in addition, applications can always send real-time data to the Spice.ai runtime through its API with a simple HTTP POST (and in the future, using Web Sockets and gRPC).

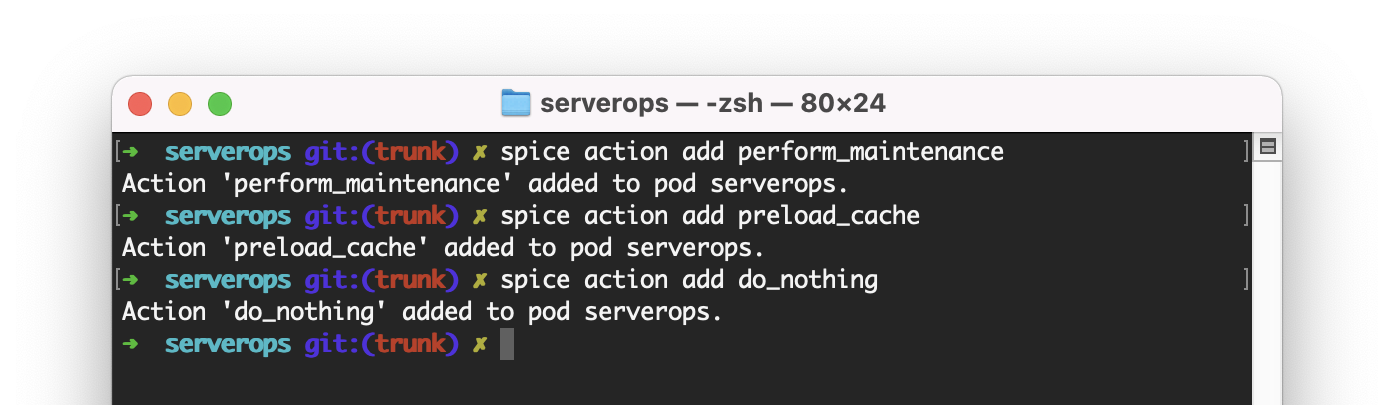

Now that the Spicepod has data, let’s define some data-driven actions so the ServerOps application can learn the best time to take them. We’ll add three actions using the CLI helper command, spice action add.

And in the manifest:

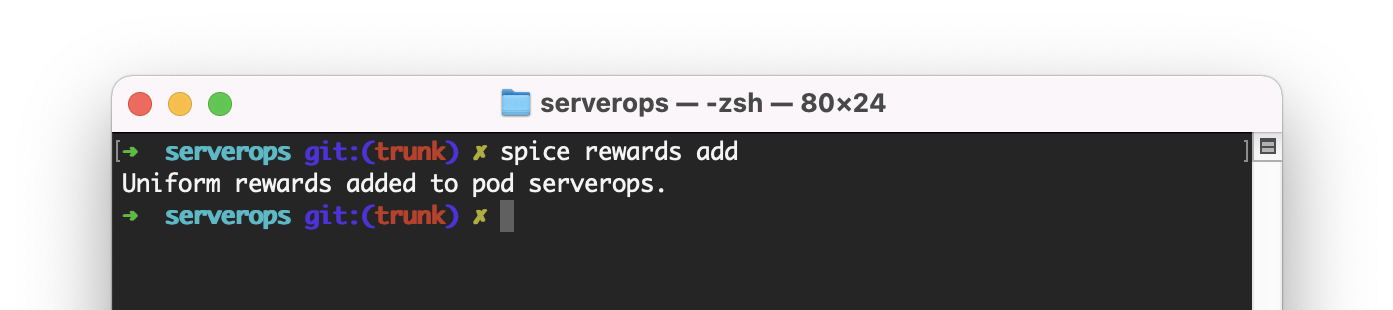

The Spicepod now has data and possible actions, so we can now define how it should learn when to take them. Similar to how humans learn, we can set rewards or punishments for actions taken based on their effect and the data. Let’s add scaffold rewards for all actions using the spice rewards add command.

We now have rewards set for each action. The rewards are uniform (all the same), meaning the Spicepod is rewarded the same for each action. Higher rewards are better, so if we change perform_maintenance to 2, the Spicepod will learn to perform maintenance more often than the other actions. Of course, instead of setting these arbitrarily, we want to learn from data, and we can do that by referencing the state of data at each time-step in the time-series data as the AI engine trains.

The rewards themselves are just code. We currently support Python code, either inline or in an external .py code file, and we plan to support several other languages. The reward code can access the time-step state through the prev_state and new_state variables and the dataspace name. For the full documentation, see Rewards.

Let’s add this reward code to perform_maintenance, which will reward performing maintenance when there is low CPU usage.

    cpu_usage_prev = 100 - prev_state.hostmetrics_cpu_usage_idle
    cpu_usage_new = 100 - new_state.hostmetrics_cpu_usage_idle
    cpu_usage_delta = cpu_usage_prev - cpu_usage_new
    reward = cpu_usage_delta / 100

This code takes the delta in CPU usage (100 minus the idle time) between the previous time state and the current time state, and sets the reward to a normalized delta value between -1 and 1. When the CPU usage is moving from a higher cpu_usage_prev to a lower cpu_usage_new, it’s a better time to run server maintenance, so we reward the drop in proportion to its size. E.g. 80% - 50% = 30% = 0.3. However, if the CPU usage moves from lower to higher, 50% - 80% = -30% = -0.3, it’s a bad time to run maintenance, so we provide a negative reward or “punish” the action.
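To check the arithmetic, the reward logic can be run outside the Spice.ai runtime. The sketch below wraps the same four lines in a function and uses `types.SimpleNamespace` as a stand-in for `prev_state` and `new_state`. The stand-in objects and the `maintenance_reward` name are assumptions made for illustration and are not part of Spice.ai.

```python
from types import SimpleNamespace


def maintenance_reward(prev_state, new_state):
    """Reward a drop in CPU usage between two time-steps."""
    # CPU usage is 100 minus the idle percentage.
    cpu_usage_prev = 100 - prev_state.hostmetrics_cpu_usage_idle
    cpu_usage_new = 100 - new_state.hostmetrics_cpu_usage_idle
    # Positive when usage fell, negative when it rose.
    cpu_usage_delta = cpu_usage_prev - cpu_usage_new
    return cpu_usage_delta / 100


# Hypothetical stand-in states: 20% idle means 80% usage.
busy = SimpleNamespace(hostmetrics_cpu_usage_idle=20)
quiet = SimpleNamespace(hostmetrics_cpu_usage_idle=50)

falling = maintenance_reward(busy, quiet)  # usage 80% -> 50%
rising = maintenance_reward(quiet, busy)   # usage 50% -> 80%
print(falling, rising)
```

The two calls reproduce the 0.3 and -0.3 cases from the text: the same pair of states yields a reward in one direction and a punishment in the other.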

Through these rewards and punishments and the CPU metric data, the Spicepod will learn when it is a good time to perform maintenance and be the decision engine for the ServerOps application. You might be thinking you could write code without AI to do this, which is true, but handling the variety of cases, like CPU spikes, or patterns in the data, like cyclical server load, would take a lot of code and development time. Applying AI helps you build faster.

The manifest now has defined data, actions, and rewards. The Spicepod can get data to learn which actions to take and when based on the rewards provided.

If the Spice.ai runtime is running, the Spicepod automatically trains each time the manifest file is saved. As this happens, reward performance can be monitored in the dashboard.

Once a training run completes, the application can query the Spicepod for a decision recommendation by calling the recommendations API http://localhost:8000/api/v0.1/pods/serverops/recommendation. The API returns a JSON document that provides the recommended action, the confidence of taking that action, and when that recommendation is valid.
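As a minimal sketch of calling this endpoint from application code, the Python below builds the URL from the text and decodes the returned JSON document using only the standard library. The helper names `recommendation_url` and `fetch_recommendation` are hypothetical, and the sketch makes no assumption about the field names inside the response.

```python
import json
from urllib.request import urlopen

# Base URL taken from the endpoint quoted above.
BASE_URL = "http://localhost:8000/api/v0.1"


def recommendation_url(pod: str) -> str:
    """Build the recommendation endpoint URL for a pod."""
    return f"{BASE_URL}/pods/{pod}/recommendation"


def fetch_recommendation(pod: str, timeout: float = 5.0) -> dict:
    """GET the recommendation and decode the JSON document."""
    with urlopen(recommendation_url(pod), timeout=timeout) as response:
        return json.load(response)


# Usage (requires a running Spice.ai runtime with a trained pod):
#   print(json.dumps(fetch_recommendation("serverops"), indent=2))
```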

In the ServerOps Quickstart, this API is called from the server maintenance PowerShell script to make an intelligent decision on when to run maintenance. The ServerOps Sample, which uses live data, can be continuously trained to learn and adapt even as the live data changes due to load patterns changing.

The full Spicepod manifest from this walkthrough can be added from spicerack.org using the spice add quickstarts/serverops command.

Leveraging Spice.ai as the decision engine for your server maintenance application helps you build smarter applications faster, applications that continue to learn and adapt even as usage patterns change over time.

Building intelligent apps that leverage AI is still way too hard, even for advanced developers. Our mission is to make this as easy as creating a modern web page. If the vision resonates with you, join us!

Our Spice.ai Roadmap is public, and now that we have launched, the project and work are open for collaboration.

If you are interested in partnering, we’d love to talk. Try out Spice.ai, email us “hey,” get in touch on Discord, or reach out on Twitter.

We are just getting started! 🚀

Luke

Announcing the release of Spice.ai v0.4.1-alpha! ✅

This point release focuses on fixes and improvements to v0.4-alpha. Highlights include AI engine performance improvements, updates to the dashboard observations data grid, notification of new CLI versions, and several bug fixes.

A special acknowledgment to @Adm28, who added the CLI upgrade detection and prompt, which notifies users of new CLI versions and prompts to upgrade.

Overall training performance has been improved by up to 13% by removing a lock in the AI engine.

In versions before v0.4.1-alpha, performance was especially impacted when streaming new data during a training run.

The dashboard observations datagrid now automatically resizes to the window width, and headers are easier to read, with automatic grouping into dataspaces. Column widths are also resizable.

When it is run, the Spice.ai CLI will now automatically check for new CLI versions at most once a day.

If it detects a new version, it will print a notification to the console on spice version, spice run or spice add commands prompting the user to upgrade using the new spice upgrade command.

time_format of hex or prefix with 0x.

Spicepods directory, and a resulting error when loading a non-Spicepod file.

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

The Spice.ai project strives to help developers build applications that leverage new AI advances which can be easily trained, deployed, and integrated. A previous blog post introduced Spicepods: a declarative way to create AI applications with Spice.ai technology. While there are many libraries and platforms in the space, Spice.ai is focused on time-series data aligning to application-centric and frequently time-dependent data, and a Reinforcement Learning approach, which can be more developer-friendly than expensive, labeled supervised learning.

This post will discuss some of the challenges and directions for the technology we are developing.

Time series AI has become more popular over recent years, and there is extensive literature on the subject, including time-series-focused neural networks. Research in this space points to the likelihood that there is no silver bullet, and a single approach to time series AI will not be sufficient. However, for developers, this can make building a product complex, as it comes with the challenge of exploring and evaluating many algorithms and approaches.

A fundamental challenge of time series is the data itself. The shape and length are usually variable and can even be infinite (real-time streams of data). The volume of data required is often too much for simple and efficient machine learning algorithms such as Decision Trees. This challenge makes Deep Learning popular to process such data. There are several types of neural networks that have been shown to work well with time series so let’s review some of the common classes:

While not a complete representation of classes of neural networks, this list represents the areas of the most potential for Spice.ai’s time-series AI technology. We also see other interesting paradigms to explore when improving the core technology, like Memory Augmented Neural Networks (MANN) or neural network-based Genetic Algorithms.

Reinforcement Learning (RL) has grown steadily, especially in fields like robotics. Usually, RL doesn’t require as much data processing as Supervised Learning, where large datasets can be demanding for hardware and people alike. RL is more dynamic: agents aren’t trained to replicate specific behaviors or outputs but explore and ’exploit’ their environment to maximize a given reward.

Most of today’s research is based on environments the agent can interact with during the training process, known as online learning. Usually, efficient training processes have multiple agent/environment pairs training together and sharing their experiences. Having an environment for agents to interact with enables actions that differ from the actual historical state, known as on-policy learning, while using only past experiences without an environment is off-policy learning.

Spice.ai is initially taking an off-policy approach, where an environment (either pre-made or given by the user) is not required. Despite limiting the exploration of agents, this aligns to an application-centric approach as:

The Spice.ai approach to time series AI can be described as ‘Data-Driven’ Reinforcement Learning. This domain is very exciting, and we are building upon excellent research that is being published. The Berkeley Artificial Intelligence Research blog shows the potential of this field, as does the work of many other research entities that have made great discoveries, like DeepMind, OpenAI, Facebook AI and Google AI (among many others). We are inspired and are building upon all the research in Reinforcement Learning to develop core Spice.ai technology.

If you are interested in Reinforcement Learning, we recommend following these blogs, and if you’d like to partner with us on the mission of making it easier to build intelligent applications by leveraging RL, we invite you to discuss with us on Discord, reach out on Twitter or email us.

Corentin

We are excited to announce the release of Spice.ai v0.4-alpha! 🏄♂️

Highlights include support for authoring reward functions in a code file, the ability to specify the time of recommendation, and ingestion support for transaction/correlation ids. Authoring reward functions in a code file is a significant improvement to the developer experience over specifying functions inline in the YAML manifest, and we are looking forward to your feedback on it!

If you are new to Spice.ai, check out the getting started guide and star spiceai/spiceai on GitHub.

The spice upgrade command was added in the v0.3.1-alpha release, so you can now upgrade from v0.3.1 to v0.4 by simply running spice upgrade in your terminal. Special thanks to community member @Adm28 for contributing this feature!

In addition to defining reward code inline, it is now possible to author reward code in functions in a separate Python file.

The reward function file path is defined by the reward_funcs property.

A function defined in the code file is mapped to an action by authoring its name in the with property of the relevant reward.

Example:

    training:
      reward_funcs: my_reward.py
      rewards:
        - reward: buy
          with: buy_reward
        - reward: sell
          with: sell_reward
        - reward: hold
          with: hold_reward

Learn more in the documentation: docs.spiceai.org/concepts/rewards/external
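A code file matching the manifest example above might look like the following sketch. Only the function names `buy_reward`, `sell_reward` and `hold_reward` come from the example. The two-argument `(prev_state, new_state)` signature, the `price` field and the reward logic are assumptions made for illustration, so check the linked documentation for the signature the runtime actually expects.

```python
from types import SimpleNamespace

# Contents of a hypothetical my_reward.py. The (prev_state, new_state)
# signature and the "price" field are assumptions for illustration.


def _price_change(prev_state, new_state):
    """Change in the (hypothetical) price field between time-steps."""
    return new_state.price - prev_state.price


def buy_reward(prev_state, new_state):
    # Buying is rewarded when the price rises afterwards.
    return _price_change(prev_state, new_state)


def sell_reward(prev_state, new_state):
    # Selling is rewarded when the price falls afterwards.
    return -_price_change(prev_state, new_state)


def hold_reward(prev_state, new_state):
    # Holding is neutral in this sketch.
    return 0.0


# Quick local check with stand-in state objects.
before = SimpleNamespace(price=100.0)
after = SimpleNamespace(price=103.0)
rewards = {
    "buy": buy_reward(before, after),
    "sell": sell_reward(before, after),
    "hold": hold_reward(before, after),
}
print(rewards)
```

Keeping each reward in its own named function lets the manifest stay declarative while the logic can be exercised locally, as in the check at the end of the sketch.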

Spice.ai can now learn from cyclical patterns, such as daily, weekly, or monthly cycles.

To enable automatic cyclical field generation from the observation time, specify one or more time categories, such as month or dayofweek, in the time section of the pod manifest.

For example, by specifying month, the Spice.ai engine automatically creates a field in the AI engine data stream called time_month_{month}, with the value calculated from the month to which the timestamp relates.

Example:

    time:
      categories:
        - month
        - dayofweek

Supported category values are: month, dayofmonth, dayofweek, hour.

Learn more in the documentation: docs.spiceai.org/reference/pod/#time
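As a rough illustration of how a timestamp might map to generated fields such as time_month_{month}, the sketch below derives each supported category from a Unix timestamp. Only the time_month_{month} naming comes from the text above. Reusing that pattern for the other categories, setting the field value to 1, interpreting timestamps as UTC, and numbering weekdays from Monday = 0 are all assumptions, and `time_category_fields` is a hypothetical helper rather than a Spice.ai function.

```python
from datetime import datetime, timezone


def time_category_fields(unix_timestamp: int, categories: list[str]) -> dict[str, int]:
    """Return assumed field names, each set to 1, for one timestamp."""
    moment = datetime.fromtimestamp(unix_timestamp, tz=timezone.utc)
    values = {
        "month": moment.month,          # 1-12
        "dayofmonth": moment.day,       # 1-31
        "dayofweek": moment.weekday(),  # 0 = Monday (assumed numbering)
        "hour": moment.hour,            # 0-23
    }
    fields = {}
    for category in categories:
        if category not in values:
            raise ValueError(f"unsupported time category: {category}")
        fields[f"time_{category}_{values[category]}"] = 1
    return fields


# 1605729600 is 2020-11-18 20:00:00 UTC, a Wednesday.
fields = time_category_fields(1605729600, ["month", "dayofweek"])
print(fields)
```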

It is now possible to specify the time of recommendations fetched from the /recommendation API.

Valid times are from pod epoch_time to epoch_time + period.

Previously the API only supported recommendations based on the time of the last ingested observation.

Requests are made in the following format: GET http://localhost:8000/api/v0.1/pods/{pod}/recommendation?time={unix_timestamp}

An example for quickstarts/trader:

GET http://localhost:8000/api/v0.1/pods/trader/recommendation?time=1605729600

Specifying {unix_timestamp} as 0 will return a recommendation based on the latest data. An invalid {unix_timestamp} will return a result that has the valid time range in the error message:

    {
      "response": {
        "result": "invalid_recommendation_time",
        "message": "The time specified (1610060201) is outside of the allowed range: (1610057600, 1610060200)",
        "error": true
      }
    }
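A client might build the timed request and detect this error document along the following lines. This is a sketch under stated assumptions: `recommendation_url` and `error_message` are hypothetical helpers, and the only response fields used are the ones that appear in the error example above.

```python
import json
from urllib.parse import urlencode

# Base URL taken from the request format quoted above.
BASE_URL = "http://localhost:8000/api/v0.1"


def recommendation_url(pod: str, unix_timestamp: int = 0) -> str:
    """Build the recommendation URL; 0 asks for the latest data."""
    query = urlencode({"time": unix_timestamp})
    return f"{BASE_URL}/pods/{pod}/recommendation?{query}"


def error_message(document: dict):
    """Return 'result: message' for an error document, else None."""
    response = document.get("response", {})
    if response.get("error"):
        return f'{response.get("result")}: {response.get("message")}'
    return None


url = recommendation_url("trader", 1605729600)

# The error document from the text, used here as sample input.
sample = json.loads("""
{
  "response": {
    "result": "invalid_recommendation_time",
    "message": "The time specified (1610060201) is outside of the allowed range: (1610057600, 1610060200)",
    "error": true
  }
}
""")
message = error_message(sample)
print(url)
print(message)
```

Because the error message carries the valid range, a caller can surface it directly or retry with a time inside that range.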

order_id, trace_id) in the pod manifest.

training section is not included in the manifest.

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Discord or by email to get involved. We will also be starting a community call series soon!

The last post in this series, Making Apps that Learn and Adapt, described the shift from building AI/ML solutions to building apps that learn and adapt. But, how does the app learn? And as a developer, how do I teach it what it should learn?

With Spice.ai, we teach the app how to learn using a Spicepod.